Saihub.info

Safe and Responsible AI Information Hub


The Safe and Responsible AI Information Hub (Saihub.info) aims to be the leading source of information on safe and responsible artificial intelligence, including AI regulation and governance, and technical solutions for AI safety. This emerging and rapidly changing field has huge implications for society, and our team and partners are deeply involved and tremendously interested in it.

We believe that AI offers huge potential benefits to society, and our aim is to enable those benefits while managing harm and risk. We agree with the eloquent and detailed case made by Yoshua Bengio for attention to AI safety notwithstanding the many benefits of AI. Dario Amodei (CEO of Anthropic, which is the most focused on risk among the main large language model providers) has also written at length about the potential benefits of AI in the context of Anthropic's risk-focused approach.

There is much debate about which AI harms and risks deserve attention -- e.g. current harms vs. existential risk. We believe all potential harms are worthy of attention (although some are more serious than others). We aim to listen to all perspectives and present factual information, as well as intelligent analysis of AI safety issues.

The language of safe and responsible AI can be political -- "AI safety" tends to be associated with those working on existential risk and AI alignment, while "responsible AI" or "ethical AI" tend to be associated with those addressing current harms. We do not draw this distinction, and terms on this site should be assumed not to have political intent.

Our work is in its early days. We aim for the information on this site to be accurate (and the opinions reasonable ones), but there may be mistakes or omissions. Most of the substantive pages of Saihub.info include an update date -- this does not mean that the information is complete, nor does it guarantee that the information is accurate as of that date, but it lets you know how recently we have reviewed the information.

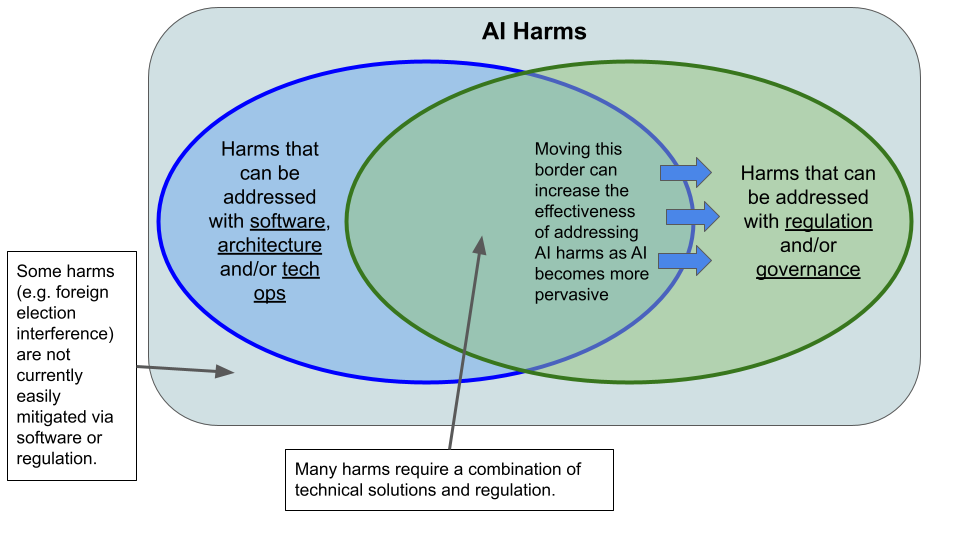

Saihub.info is organized in terms of harms, which can be addressed by AI regulatory and governance solutions, technical solutions and entities / funding. The Harms menu above includes sub-pages for (i) a harms register and (ii) detailed analysis of individual harms (currently only a few pages, but eventually one for each harm in the register). We draw a clear distinction between 'harms' and 'risks', as explained in our blog.

Here is an illustration of part of how we think about AI harms and their solutions.

Saihub.info is a project of Lily Innovation. Please see our Privacy Policy for information on how we use and protect your information.